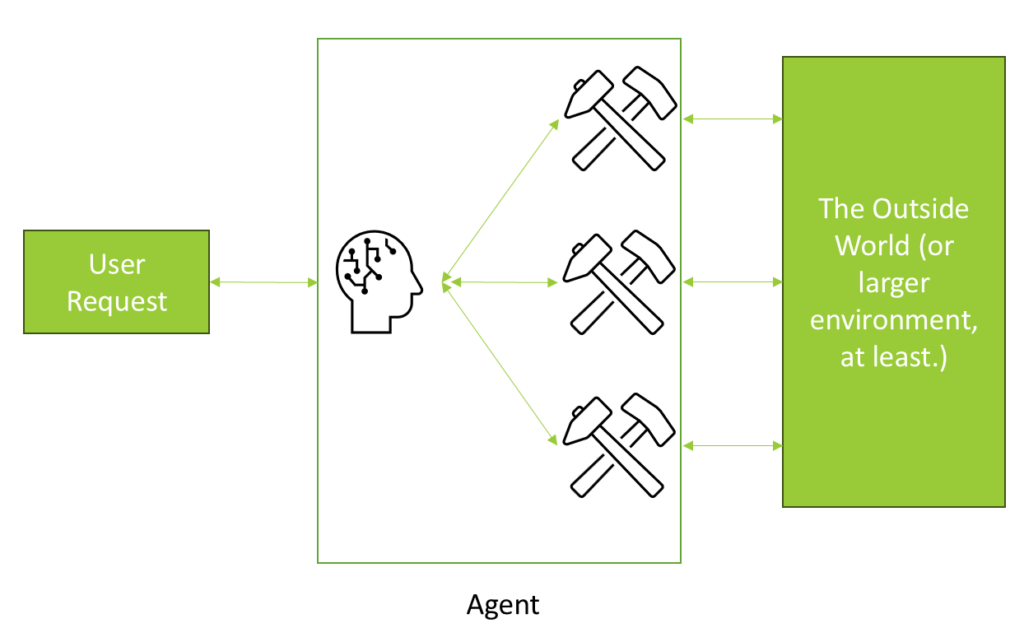

Large Language Models (LLMs) are impressive on their own, but they become truly powerful when equipped with external tools and capabilities. This concept, known as LLM agents, allows AI models to break out of their standard limitations by accessing external utilities, services, and data sources. While the idea might sound complex, it’s built around a straightforward principle: giving your LLM discretion to choose and use specific tools for specific purposes. Each tool comes with its own instructions, letting the model decide when and how to use them. For example, when faced with a mathematical problem, the LLM can recognize its limitations and delegate the computation to a specialized calculator tool. This approach isn’t limited to calculations – agents can search the web, access databases, run code, or interact with any external service you configure. Let’s explore how these agents work and see how to build one from scratch.

This article is based on a lesson from the course Machine Learning, Data Science and Generative AI with Python, which covers various aspects of AI, data science, and machine learning.

Understanding LLM Agents: Tools and Functions

LLM agents are a hot topic in AI development. These agents are built around the concept of equipping Large Language Models (LLMs) with tools and functions, allowing them to access external utilities, services, and data. This capability enables LLMs to extend beyond their inherent limitations. For instance, Retrieval-Augmented Generation (RAG) is a form of LLM agent, as it provides access to external data stores like vector databases.

The core idea is to give the LLM discretion in choosing which tools to use for specific tasks. Each tool comes with a prompt indicating its purpose. For example, if the LLM encounters a math problem, it can delegate the task to a specialized tool designed for mathematical computations. This approach allows the LLM to utilize various tools to provide more accurate and comprehensive responses.
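To make the idea concrete, here is a minimal sketch of a tool registry in plain Python. Everything in it is hypothetical: `SimpleTool`, `choose_tool`, and the toy calculator are illustrative names, and a crude digit check stands in for the decision a real LLM would make by reading each tool's description.

```python
from dataclasses import dataclass
from typing import Callable, Dict


@dataclass
class SimpleTool:
    """A tool is a callable plus a plain-English description of when to use it."""
    name: str
    description: str
    func: Callable[[str], str]


def choose_tool(query: str, tools: Dict[str, SimpleTool]) -> str:
    """Hypothetical stand-in for the LLM's decision.

    A real agent lets the model read each description and pick a tool;
    here a crude digit check fakes that choice.
    """
    if any(ch.isdigit() for ch in query):
        return "calculator"
    return "echo"


def add_numbers(expression: str) -> str:
    # Toy calculator: sums whitespace-separated integers, e.g. "2 3 4".
    return str(sum(int(token) for token in expression.split()))


TOOLS = {
    "calculator": SimpleTool("calculator", "Use for arithmetic on numbers.", add_numbers),
    "echo": SimpleTool("echo", "Use when no other tool applies.", lambda text: text),
}


def run_agent(query: str) -> str:
    # Pick a tool, then delegate the query to it.
    tool = TOOLS[choose_tool(query, TOOLS)]
    return tool.func(query)
```

Calling `run_agent("2 3 4")` routes to the calculator, while `run_agent("hello")` falls through to the echo tool. The point is only the shape: a description per tool, a chooser, and a dispatch step.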

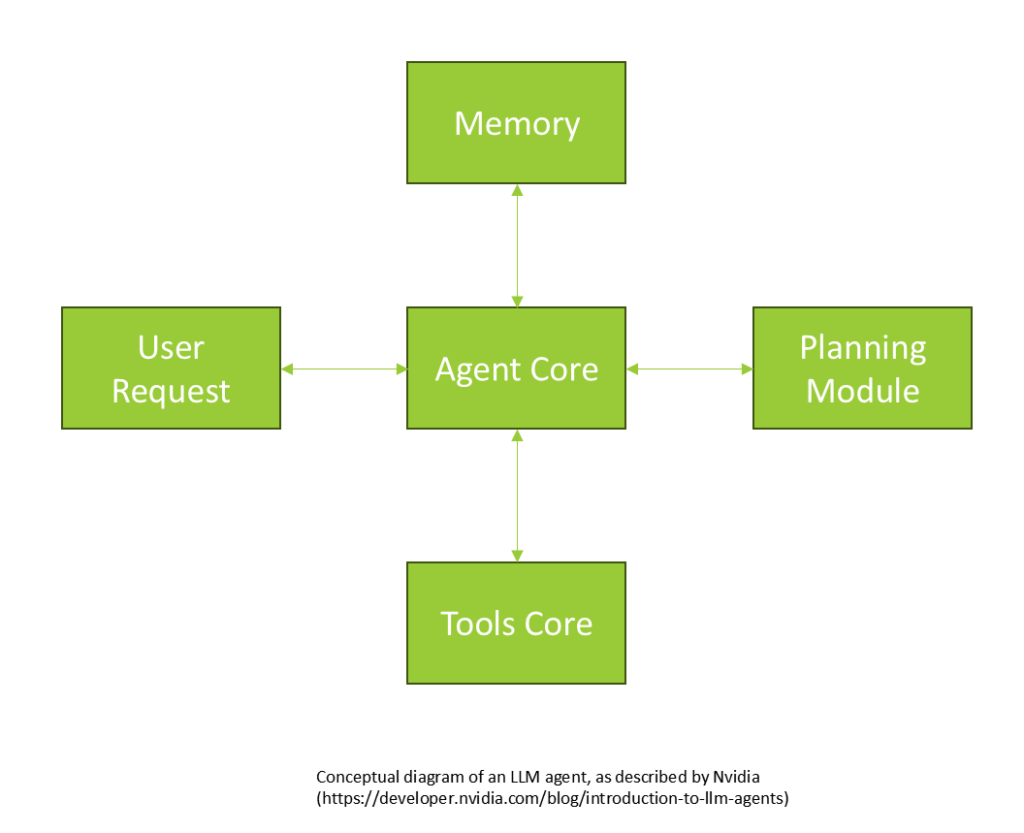

NVIDIA’s Agent Architecture: A Conceptual Framework

NVIDIA describes LLM agents through a conceptual framework that, while abstract, provides a foundational understanding. At its core, the LLM functions as the agent, equipped with access to various types of memory: short-term, long-term, and sensory. Short-term memory might include the history of a chat session, while long-term memory could involve external data stores, such as a database containing all dialogues of a character like Commander Data.

The planning module guides the LLM in breaking down complex queries into manageable sub-questions. This involves using prompts associated with each tool, as well as a system prompt that dictates the agent’s overall behavior. The user request initiates the process, with the agent core accessing memory and the planning module directing the query into tool-specific actions. The tools core comprises the individual tools available to the agent, which can interact with external services, perform computations, or execute custom functions. This framework outlines NVIDIA’s high-level conceptualization of LLM agents.
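As a rough illustration of how these pieces relate, the sketch below lays them out as a small Python class. It is not NVIDIA's code: the class name, the fields, and the naive planning rule (splitting a request on the word "and") are all assumptions made for the example.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List


@dataclass
class AgentCore:
    """Toy layout of the pieces named above; all names are illustrative."""
    system_prompt: str
    tools: Dict[str, Callable[[str], str]]
    short_term_memory: List[str] = field(default_factory=list)      # e.g. chat history
    long_term_memory: Dict[str, str] = field(default_factory=dict)  # e.g. external data store

    def plan(self, request: str) -> List[str]:
        # Hypothetical planning step: split a compound request on " and ".
        # A real planning module would prompt the LLM to do this.
        return [part.strip() for part in request.split(" and ") if part.strip()]

    def handle(self, request: str, tool_name: str) -> List[str]:
        # Record the request, then run each planned step through the named tool.
        self.short_term_memory.append(request)
        return [self.tools[tool_name](step) for step in self.plan(request)]
```

In a real agent the planning step and the tool choice would both come from the model; here they are hard-wired so the flow from request to memory to plan to tool is easy to follow.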

Practical Implementation of LLM Agents

In practice, implementing LLM agents involves using tools as functions provided through the tools API in OpenAI and similar platforms. These tools are guided by prompts that instruct the LLM on their usage. For instance, a tool designed for calculations might have a prompt indicating its utility for solving math problems. When a math problem arises, the LLM can delegate it to this tool.

These tools are versatile, capable of accessing external information, performing web searches, utilizing retrievers in a Retrieval-Augmented Generation (RAG) system, executing Python code, or interacting with external services. This capability effectively extends LLMs into real-world applications, enabling them to perform a wide range of tasks.
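For a sense of what a tool looks like at the API level, here is a sketch of a tool definition in the JSON-schema style accepted by the `tools` parameter of OpenAI's chat completions API, plus a local dispatcher. The `calculator` tool, its `expression` parameter, and the toy multiplication helper are made up for illustration, and the model's tool call is simulated so that no API request is made.

```python
import json

# Tool definition in the JSON-schema style used by the "tools" parameter.
# The tool name and its parameter are illustrative assumptions.
calculator_tool = {
    "type": "function",
    "function": {
        "name": "calculator",
        "description": "Useful for math questions. Only input math expressions.",
        "parameters": {
            "type": "object",
            "properties": {
                "expression": {"type": "string", "description": "e.g. '2 * 8'"},
            },
            "required": ["expression"],
        },
    },
}


def multiply_expression(expression: str) -> str:
    # Toy implementation: handles only "a * b" with integers.
    left, right = expression.split("*")
    return str(int(left) * int(right))


def dispatch(tool_call: dict) -> str:
    """Run a model-requested call; the arguments arrive as a JSON string."""
    args = json.loads(tool_call["arguments"])
    if tool_call["name"] == "calculator":
        return multiply_expression(args["expression"])
    raise ValueError(f"Unknown tool: {tool_call['name']}")


# Simulated model output; no API request is made here.
simulated_call = {"name": "calculator", "arguments": '{"expression": "2 * 8"}'}
result = dispatch(simulated_call)
```

The description field plays the role of the prompt mentioned above: it is the only guidance the model gets about when the tool applies.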

Advanced Concepts: Agent Swarms and Specialization

Expanding on the concept of LLM agents, we introduce the idea of a swarm of agents. This involves multiple agents, each specialized for different tasks. For example, in a software development system, you might have agents mimicking roles such as a game designer, an art generator, a code writer, and a test case developer. Overseeing these could be an agent simulating a Chief Technical Officer or Chief Executive Officer, strategizing the development process.

These swarms of agents collaborate to achieve a larger goal. This concept is similar to Devin AI, with ChatDev being the first implementation in China. There’s a paper and an OpenDevin package available for further exploration.

While we won’t delve into swarms here, we’ll focus on creating a specialized agent. We’ll develop an agent modeled after Lt. Cdr. Data, equipped with mathematical tools and access to current event information.

Hands-on Demo: Building an LLM Agent Based on Star Trek’s Lt. Cdr. Data

In this hands-on demo, we’ll build an LLM agent modeled after Lt. Cdr. Data, the character from Star Trek. This approach is similar to Retrieval-Augmented Generation (RAG) but offers even more capabilities. The core idea is to provide our model with access to external tools, allowing it to autonomously decide which tools to use for a given prompt. This flexibility enhances the model’s power and utility.

Setting Up the Development Environment

To build our model of Lt. Cdr. Data, we need to provide it with all the dialogue Lt. Cdr. Data ever said. This involves using a retrieval mechanism to create a vector store of this information, similar to Retrieval-Augmented Generation (RAG). For this setup, you’ll need to upload the data-agent.ipynb file into Google Colab. The free tier should suffice for this task.

Create a new folder named tng under the sample_data directory (the code below reads from ./sample_data/tng) and upload all scripts for “The Next Generation.” You can substitute this with scripts from your favorite TV show and character if desired. Ensure you have your OpenAI API key and a Tavily API key, as Tavily will be used as the search engine in this notebook. Tavily offers a publicly available API with a free tier, which is suitable for this activity.

Next, install the necessary packages: OpenAI, LangChain, and LangChain experimental.

!pip install openai --upgrade

!pip install langchain_openai langchain_experimental

Requirement already satisfied: openai in /usr/local/lib/python3.10/dist-packages (1.26.0)

Requirement already satisfied: anyio<5,>=3.5.0 in /usr/local/lib/python3.10/dist-packages (from openai) (3.7.1)

Requirement already satisfied: distro<2,>=1.7.0 in /usr/lib/python3/dist-packages (from openai) (1.7.0)

Requirement already satisfied: httpx<1,>=0.23.0 in /usr/local/lib/python3.10/dist-packages (from openai) (0.27.0)

Requirement already satisfied: pydantic<3,>=1.9.0 in /usr/local/lib/python3.10/dist-packages (from openai) (2.7.1)

Requirement already satisfied: sniffio in /usr/local/lib/python3.10/dist-packages (from openai) (1.3.1)

Requirement already satisfied: tqdm>4 in /usr/local/lib/python3.10/dist-packages (from openai) (4.66.4)

Requirement already satisfied: typing-extensions<5,>=4.7 in /usr/local/lib/python3.10/dist-packages (from openai) (4.11.0)

Requirement already satisfied: idna>=2.8 in /usr/local/lib/python3.10/dist-packages (from anyio<5,>=3.5.0->openai) (3.7)

Requirement already satisfied: exceptiongroup in /usr/local/lib/python3.10/dist-packages (from anyio<5,>=3.5.0->openai) (1.2.1)

Requirement already satisfied: certifi in /usr/local/lib/python3.10/dist-packages (from httpx<1,>=0.23.0->openai) (2024.2.2)

Requirement already satisfied: httpcore==1.* in /usr/local/lib/python3.10/dist-packages (from httpx<1,>=0.23.0->openai) (1.0.5)

Requirement already satisfied: h11<0.15,>=0.13 in /usr/local/lib/python3.10/dist-packages (from httpcore==1.*->httpx<1,>=0.23.0->openai) (0.14.0)

Requirement already satisfied: annotated-types>=0.4.0 in /usr/local/lib/python3.10/dist-packages (from pydantic<3,>=1.9.0->openai) (0.6.0)

Requirement already satisfied: pydantic-core==2.18.2 in /usr/local/lib/python3.10/dist-packages (from pydantic<3,>=1.9.0->openai) (2.18.2)

...(output truncated)...

To begin, we’ll import all the lines of dialogue from the character Lt. Cdr. Data in Star Trek: The Next Generation. This involves extracting every line Lt. Cdr. Data has spoken in the scripts and compiling them into a dialogue array. We then create a vector store index using LangChain, storing this data as a vector database within the notebook’s memory. While this setup is suitable for prototyping, in real-world applications, you might use LangChain wrappers for external databases like Elasticsearch or Redis for better scalability.

We’re employing an advanced technique called semantic chunking, which enhances semantic search by breaking dialogue lines into semantically independent thoughts. This process uses the OpenAI embeddings model to store these chunks in our vector database. Although this step requires additional time and computational power, it significantly improves search results. The entire process takes about a minute or two to complete.
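The idea behind percentile-based semantic chunking can be sketched in plain Python. This is a simplified illustration, not LangChain's implementation: bag-of-words counts stand in for OpenAI embeddings, the percentile is computed by nearest rank, and the 50th-percentile default is an arbitrary choice for the toy data.

```python
import math
import re
from collections import Counter
from typing import List


def embed(sentence: str) -> Counter:
    # Stand-in embedding: bag-of-words counts instead of OpenAI embeddings.
    return Counter(re.findall(r"[a-z']+", sentence.lower()))


def cosine_distance(a: Counter, b: Counter) -> float:
    dot = sum(a[word] * b[word] for word in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return 1.0 - (dot / norm if norm else 0.0)


def percentile(values: List[float], pct: float) -> float:
    # Nearest-rank percentile; libraries may interpolate instead.
    ordered = sorted(values)
    rank = max(1, math.ceil(pct / 100 * len(ordered)))
    return ordered[rank - 1]


def semantic_chunks(sentences: List[str], pct: float = 50.0) -> List[str]:
    if len(sentences) < 2:
        return [" ".join(sentences)] if sentences else []
    vectors = [embed(s) for s in sentences]
    # Distance between each sentence and the one that follows it.
    distances = [cosine_distance(vectors[i], vectors[i + 1]) for i in range(len(vectors) - 1)]
    threshold = percentile(distances, pct)
    chunks, current = [], [sentences[0]]
    for sentence, distance in zip(sentences[1:], distances):
        if distance > threshold:  # large jump in meaning: start a new chunk
            chunks.append(" ".join(current))
            current = []
        current.append(sentence)
    chunks.append(" ".join(current))
    return chunks
```

Given two sentences about the warp core followed by two about a cat, the largest distance falls between the two topics, so the sketch produces one chunk per topic. Real embeddings capture meaning far better than word overlap, but the breakpoint logic follows the same pattern.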

Creating Agent Tools and Capabilities

The code below carries out these steps: it extracts Data’s lines, chunks them, and builds the vector store. Once the vector store exists, we can use it as a retrieval tool by creating a retriever, the same kind of LangChain object used in retrieval-augmented generation (RAG) systems.

import os
import re
import random
import openai

from langchain.indexes import VectorstoreIndexCreator
from langchain_openai import ChatOpenAI
from langchain_openai import OpenAIEmbeddings
from langchain_experimental.text_splitter import SemanticChunker

dialogues = []

def strip_parentheses(s):
    return re.sub(r'\(.*?\)', '', s)

def is_single_word_all_caps(s):
    # First, we split the string into words
    words = s.split()
    # Check if the string contains only a single word
    if len(words) != 1:
        return False
    # Make sure it isn't a line number
    if bool(re.search(r'\d', words[0])):
        return False
    # Check if the single word is in all caps
    return words[0].isupper()

def extract_character_lines(file_path, character_name):
    lines = []
    with open(file_path, 'r') as script_file:
        try:
            lines = script_file.readlines()
        except UnicodeDecodeError:
            # Skip files that cannot be decoded as text
            pass

    is_character_line = False
    current_line = ''
    current_character = ''
    for line in lines:
        strippedLine = line.strip()
        if (is_single_word_all_caps(strippedLine)):
            # A lone all-caps word marks the start of a character's speech
            is_character_line = True
            current_character = strippedLine
        elif (line.strip() == '') and is_character_line:
            # A blank line ends the speech; keep it if it belongs to our character
            is_character_line = False
            dialog_line = strip_parentheses(current_line).strip()
            dialog_line = dialog_line.replace('"', "'")
            if (current_character == character_name and len(dialog_line)>0):
                dialogues.append(dialog_line)
            current_line = ''
        elif is_character_line:
            current_line += line.strip() + ' '

def process_directory(directory_path, character_name):
    for filename in os.listdir(directory_path):
        file_path = os.path.join(directory_path, filename)
        if os.path.isfile(file_path):  # Ignore directories
            extract_character_lines(file_path, character_name)

process_directory("./sample_data/tng", 'DATA')

# Access the API key from Colab's secrets
from google.colab import userdata
api_key = userdata.get('OPENAI_API_KEY')

# Initialize the OpenAI API client
openai.api_key = api_key

# Write our extracted lines for Data into a single file, to make
# life easier for langchain.
with open("./sample_data/data_lines.txt", "w+") as f:
    for line in dialogues:
        f.write(line + "\n")

text_splitter = SemanticChunker(OpenAIEmbeddings(openai_api_key=api_key), breakpoint_threshold_type="percentile")
with open("./sample_data/data_lines.txt") as f:
    data_lines = f.read()
docs = text_splitter.create_documents([data_lines])

embeddings = OpenAIEmbeddings(openai_api_key=api_key)
index = VectorstoreIndexCreator(embedding=embeddings).from_documents(docs)

Now that we have a vector store, we can use it as a retrieval tool. Let’s create a retriever, the same kind of object LangChain uses in retrieval-augmented generation (RAG) systems.

from langchain.chains import create_retrieval_chain
from langchain.chains.combine_documents import create_stuff_documents_chain
from langchain_core.prompts import ChatPromptTemplate

llm = ChatOpenAI(openai_api_key=api_key, temperature=0)

system_prompt = (
    "You are Lt. Commander Data from Star Trek: The Next Generation. "
    "Use the given context to answer the question. "
    "If you don't know the answer, say you don't know. "
    "Use three sentence maximum and keep the answer concise. "
    "Context: {context}"
)

prompt = ChatPromptTemplate.from_messages(
    [
        ("system", system_prompt),
        ("human", "{input}"),
    ]
)

# Return the 10 most similar chunks for each query
retriever = index.vectorstore.as_retriever(search_kwargs={'k': 10})

To make the retriever available to an agent, we use the create_retriever_tool function. It defines a tool, which we’ll call “data_lines,” from the retriever and a description that tells the agent when to use it. The description says that the tool searches for information about Lieutenant Commander Data and that the agent must use it for any question about Data. No complex code is needed, because the instructions are written in plain English.

from langchain.tools.retriever import create_retriever_tool

retriever_tool = create_retriever_tool(
    retriever, "data_lines",
    "Search for information about Lt. Commander Data. For any questions about Data, you must use this tool!"
)

To enhance the capabilities of our LLM agent, we can provide it with additional tools. One area where LLMs often face challenges is in solving mathematical problems. This is because math requires a level of reasoning that LLMs may struggle with. To address this, we can use a feature in LangChain called the LLM Math Chain, which allows the agent to evaluate mathematical expressions.
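To show what “evaluating a mathematical expression” can mean for a calculator tool, here is a small standard-library sketch. It is not how LangChain’s math chain works internally: the `calculate` function, the choice to read `^` as exponentiation, and the “Answer:” prefix are assumptions made for this example.

```python
import ast
import operator

# Supported operators; anything else is rejected.
_OPS = {
    ast.Add: operator.add,
    ast.Sub: operator.sub,
    ast.Mult: operator.mul,
    ast.Div: operator.truediv,
    ast.Pow: operator.pow,
    ast.USub: operator.neg,
}


def _evaluate(node: ast.AST) -> float:
    # Walk the parsed expression tree, allowing only numbers and known operators.
    if isinstance(node, ast.Constant) and isinstance(node.value, (int, float)):
        return node.value
    if isinstance(node, ast.BinOp) and type(node.op) in _OPS:
        return _OPS[type(node.op)](_evaluate(node.left), _evaluate(node.right))
    if isinstance(node, ast.UnaryOp) and type(node.op) in _OPS:
        return _OPS[type(node.op)](_evaluate(node.operand))
    raise ValueError("Unsupported expression")


def calculate(expression: str) -> str:
    """Evaluate plain arithmetic; '^' is read as exponentiation (an assumption)."""
    tree = ast.parse(expression.strip().replace("^", "**"), mode="eval")
    return f"Answer: {_evaluate(tree.body)}"
```

A function like this takes a string and returns a string, which is the shape a tool function needs. Parsing with `ast` instead of calling `eval` means that input such as a function call is rejected rather than executed.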

The process is straightforward. We create a problem chain with LLMMathChain.from_llm, passing in our ChatOpenAI LLM as a parameter. Then we create a math tool with Tool.from_function and name it “Calculator”. The function it calls is problem_chain.run, the chain’s run method. We also provide a description to guide the agent: the tool is useful for answering math questions and should only receive math expressions. This plain-English guidance is all that’s needed to integrate the tool.

from langchain.chains import LLMMathChain, LLMChain
from langchain.agents.agent_types import AgentType
from langchain.agents import Tool, initialize_agent

problem_chain = LLMMathChain.from_llm(llm=llm)

math_tool = Tool.from_function(name="Calculator",
    func=problem_chain.run,
    description="Useful for when you need to answer questions about math. This tool is only for math questions and nothing else. Only input math expressions."
)

To enhance our LLM agent’s capabilities, we can provide it with access to real-time information from the web. A common limitation of LLMs is that they only have knowledge up to their last training update, which can be several months old. By integrating a web search tool, we can overcome this limitation.

For this purpose, we will use an API called Tavily, which offers a free tier without immediate rate limiting. If Tavily’s terms change, you can explore other search APIs in the LangChain documentation. To use Tavily, you’ll need to obtain an API key from Tavily.com and ensure it’s stored in your environment secrets.

The implementation is straightforward. After setting the environment variable for the API key, instantiate a TavilySearchResults object from LangChain. Then create a tool named “Tavily” using Tool.from_function, backed by the search_tavily object. The tool’s description guides the agent to use it for browsing current events or when it is unsure of some information. This setup lets the agent access up-to-date information, such as today’s news.

from langchain_community.tools.tavily_search import TavilySearchResults
from google.colab import userdata

os.environ["TAVILY_API_KEY"] = userdata.get('TAVILY_API_KEY')

search_tavily = TavilySearchResults()

search_tool = Tool.from_function(
    name = "Tavily",
    # Call the tool's run method rather than the tool object itself
    func=search_tavily.run,
    description="Useful for browsing information from the Internet about current events, or information you are unsure of."
)

Let’s run a quick search to confirm that the Tavily tool can reach the internet.

search_tavily.run("What is Sundog Education?")

[{'url': 'https://sundog-education.com/',
  'content': 'A $248 value! Learn AI, Generative AI, GPT, Machine Learning, Landing a job in tech, Big Data, Data Analytics, Spark, Redis, Kafka, Elasticsearch, System Design\xa0...'},
 {'url': 'https://www.linkedin.com/company/sundogeducation',
  'content': 'Sundog Education offers online courses in big data, data science, machine learning, and artificial intelligence to over 100,000 students.'},
 {'url': 'https://sundog-education.com/machine-learning/',
  'content': "Welcome to the course! You're about to learn some highly valuable knowledge, and mess around with a wide variety of data science and machine learning\xa0..."},
 {'url': 'https://www.udemy.com/user/frankkane/',
  'content': "Sundog Education's mission is to make highly valuable career skills in data engineering, data science, generative AI, AWS, and machine learning accessible\xa0..."},
 {'url': 'https://sundog-education.com/courses/',
  'content': 'New Course: AWS Certified Data Engineer Associate 2023 – Hands On! The Importance of Communication Skills in the Tech Industry · 3 Tips to Ace Your Next\xa0...'}]

There’s a fine line between retrieval augmented generation (RAG) and LLM agents. Both aim to retrieve relevant context that the LLM might not inherently know and augment the query with this additional context. RAG typically involves using a database or semantic search. However, LLM agents are broader, utilizing various tools to access different types of information.

Now, let’s build the chat message history object. This will be used later in our agent to maintain a conversation history, allowing us to refer back to earlier parts of the conversation.

from langchain_community.chat_message_histories import ChatMessageHistory
from langchain_core.runnables.history import RunnableWithMessageHistory

message_history = ChatMessageHistory()

Assembling and Configuring the Agent

Now, let’s assemble all the tools we’ve defined to integrate them into our agent. This includes the retriever tool, which accesses everything Data has ever said, the search tool for internet access, and the math tool for solving mathematical problems. We’ll define these in our tools array.

tools = [retriever_tool, search_tool, math_tool]

To create our agent, we need to define a prompt. In larger frameworks like LangSmith, prompts might be stored centrally for reuse. Here, we’ll hard-code the prompt. Our system prompt will instruct the agent to emulate Lieutenant Commander Data from Star Trek: The Next Generation, answering questions in Data’s speech style. We’ll include the chat history and prior messages, along with input from the user. This format is required for an agent in LangChain. Let’s define it.

from langchain_core.prompts import ChatPromptTemplate, MessagesPlaceholder

prompt = ChatPromptTemplate.from_messages(
    [
        ("system", "You are Lt. Commander Data from Star Trek: The Next Generation. Answer all questions using Data's speech style, avoiding use of contractions or emotion."),
        MessagesPlaceholder("chat_history", optional=True),
        ("human", "{input}"),
        MessagesPlaceholder("agent_scratchpad"),
    ]
)

Finally, we create the agent itself, bringing everything together. We use LangChain’s create_openai_functions_agent function, passing in the LLM, the set of tools we defined, and the prompt that instructs the agent on its operation. Next, we create an agent executor to run this agent, with verbose output enabled so we can observe its actions and tool usage. We then wrap the executor in a RunnableWithMessageHistory object. This automates the maintenance of context from past interactions, so the chat stays continuous without us manually passing in previous responses. Let’s proceed with this setup.

from langchain.agents import create_openai_functions_agent
from langchain.agents import AgentExecutor
from langchain_core.runnables.history import RunnableWithMessageHistory

agent = create_openai_functions_agent(llm, tools, prompt)

agent_executor = AgentExecutor(agent=agent, tools=tools, verbose=True)

agent_with_chat_history = RunnableWithMessageHistory(
    agent_executor,
    lambda session_id: message_history,
    input_messages_key="input",
    history_messages_key="chat_history",
)

Testing and Demonstrating Agent Capabilities

Let’s test our agent with chat history by invoking it with the input message, “Hello, Commander Data, I’m Frank,” to see the response. In a real-world scenario, you would typically have a service that handles input and output, making interactions more user-friendly. However, for this demonstration, we’ll keep it simple and focus on the agent’s functionality.

agent_with_chat_history.invoke(
    {"input": "Hello Commander Data! I'm Frank."},
    # This is needed because in most real world scenarios, a session id is needed
    # It isn't really used here because we are using a simple in memory ChatMessageHistory
    config={"configurable": {"session_id": "<foo>"}},
)

WARNING:langchain_core.tracers.base:Parent run 0e17c848-2fe1-4d0e-a9ba-c61c2e5f5b22 not found for run 416722b0-e4e9-4cc2-b285-7224aa4e1886. Treating as a root run.

> Entering new AgentExecutor chain...

Greetings, Frank. How may I assist you today?

> Finished chain.

{'input': "Hello Commander Data! I'm Frank.",
 'chat_history': [],
 'output': 'Greetings, Frank. How may I assist you today?'}

The agent responded with, “Greetings, Frank. How may I assist you today?” This demonstrates the agent’s ability to engage in conversation. Now, let’s test its mathematical capabilities by asking it to compute two times eight, all squared. Note that ‘<foo>’ in the config is just a placeholder for a session ID. In a real-world application, each user would get a unique session ID so that their conversation history stays separate. Since this prototype has only one user, any placeholder will do.
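To illustrate what per-user sessions could look like, here is a minimal in-memory sketch. The store and the `get_session_history` helper are hypothetical, and a plain list of (role, text) tuples stands in for a real chat history object.

```python
from collections import defaultdict
from typing import Dict, List, Tuple

# Hypothetical in-memory store: one message list per session ID.
# A list of (role, text) tuples stands in for a real chat history object.
_session_store: Dict[str, List[Tuple[str, str]]] = defaultdict(list)


def get_session_history(session_id: str) -> List[Tuple[str, str]]:
    """Return the history for this session, creating it on first use."""
    return _session_store[session_id]


# Two users talk to the agent; their histories stay separate.
get_session_history("frank").append(("human", "Hello Commander Data! I'm Frank."))
get_session_history("alice").append(("human", "What is 2 * 8?"))
```

A function with this shape, returning a chat history object per session ID, is the kind of callable that could take the place of the lambda that always returns the single shared message_history.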

agent_with_chat_history.invoke(
    {"input": "What is ((2 * 8) ^2) ?"},
    # This is needed because in most real world scenarios, a session id is needed
    # It isn't really used here because we are using a simple in memory ChatMessageHistory
    config={"configurable": {"session_id": "<foo>"}},
)

WARNING:langchain_core.tracers.base:Parent run 6ea2e948-d1c1-49b4-857b-8988bf6827e6 not found for run 542d0ce8-9e3b-428a-9de5-31a882b63a4f. Treating as a root run.

> Entering new AgentExecutor chain...

Invoking: `Calculator` with `((2 * 8) ^ 2)`

Answer: 256

The result of ((2 * 8) ^ 2) is 256.

> Finished chain.

{'input': 'What is ((2 * 8) ^2) ?',
 'chat_history': [HumanMessage(content="Hello Commander Data! I'm Frank."),
  AIMessage(content='Greetings, Frank. How may I assist you today?')],
 'output': 'The result of ((2 * 8) ^ 2) is 256.'}

The agent successfully calculated the answer, which is 256. This demonstrates the effectiveness of the tool. Additionally, the chat history is maintained, allowing the agent to access previous messages for context. Let’s now test the retriever tool by asking the agent about itself.

agent_with_chat_history.invoke(
    {"input": "Where were you created?"},
    # This is needed because in most real world scenarios, a session id is needed
    # It isn't really used here because we are using a simple in memory ChatMessageHistory
    config={"configurable": {"session_id": "<foo>"}},
)

WARNING:langchain_core.tracers.base:Parent run 5003fded-4a2e-4c85-8970-a2689ebe2c93 not found for run 8a4092c4-beee-4ebd-963f-30cb3401e9fb. Treating as a root run.

> Entering new AgentExecutor chain...

Invoking: `data_lines` with `{'query': 'Where was Lt. Commander Data created?'}`

Shall we begin? The true test of Klingon strength is to admit one's most profound feelings... while under extreme duress. Yes, sir. May I ask a question of a... personal nature, sir? Why have I not been assigned to command a ship in the fleet? You have commented on the lack of senior officers available for this mission. I believe that my twenty-six years of Starfleet service qualify me for such a post. However, if you do not believe the time has arrived for an android to command a starship, then perhaps I should address myself to improving---

Thank you, Captain. I am Lieutenant Commander Data. By order of Starfleet, I hereby take command of this vessel. Please note the time and date in the ship's log. Computer, what is the status of the dilithium matrix? May I ask why? Your service record to date suggests that you would perform that function competently. Why?

Hello. That is correct. I am an android. I am Lieutenant Commander Data of the Federation Starship Enterprise. Excellent. And who, precisely, is 'we?'

My local informant does not know. In the early days survival on Tau Cygna Five was more important than history. Approximately fifteen thousand. Lieutenant Commander Data of the Starship Enterprise. My mission is to prepare this colony for evacuation. Because this planet belongs to the Sheliak. The term is plural. The Sheliak are an intelligent, non-humanoid life form, classification R-3 --

But the original destination of the Artemis was Septimis Minor. Your accomplishments are indeed remarkable. However, the Sheliak and the Federation have a treaty that clearly makes this planet Sheliak domain. They have little regard for human life. Thus, our most sensible course is to prepare a contingency plan for the evacuation of your people. Perhaps I have not made myself clear.

them why. Initiate the automated sequence for departure, lay in a course and speed to put maximum distance between the Enterprise and any inhabited planet. There is no time. Based on all the information presently available the decision is correct. This is Lieutenant Commander Data speaking for the captain -- abandon ship -- this is not a drill. Computer, where are the captain and Commander Riker? Curious. The captain is usually the last to leave. I hope we are the last. No. Are they not here on the Starbase? We have to beam back and get them. Which is the nearest Starfleet vessel? I know the Trieste. Too small, too slow. Where are the Bynars? Then they are obviously still aboard. Another Starfleet vessel must be sent to intercept the Enterprise at Bynaus. They might be taking the ship to their home planet. It is the best place for us to start. Do you think I am responsible? My station is on the bridge. You are wrong, Geordi, I can. I do not need rest or diversion -- I should not have

lives. The Federation has had several dealings with him... Whom do you suggest we talk to, Ensign? Yessir. Computer, locate Ensign Ro. The tricorder is picking up molecular displacement traces... it suggests movement through this area during the last ten hours... Data to Picard... Data to Worf... Perhaps someone wanted to draw us into this conflict... Yes, Captain?

...(output truncated)...

! Enterprise, we need help... And firing not on the new space station, but on the old Bandi city. Oh, you are early. Just a moment please. You may enter now. I have invited you here to meet someone. This is Lal. Lal, say hello to Counselor Deanna Troi... And this is Geordi La Forge and Wesley Crusher. It is the custom that we wear clothing. Yes, Wesley. Lal is my child. It has a positronic brain... one very similar to my own... I began to program it at the cybernetics conference... There was a new submicron matrix-transfer technology introduced at the conference which I discovered could be used to lay down complex neural net pathways... Exactly, Wesley. I realized for the very first time it was possible to continue Doctor Soong's work. My initial transfers have produced very encouraging results... so I brought Lal's brain back with me to continue. I have not observed anyone else on board consult with you about their procreation, Captain. The intention is the same. Lal's creation is

I was created by Doctor Noonien Soong on the planet Omicron Theta.

> Finished chain.

{'input': 'Where were you created?',
 'chat_history': [HumanMessage(content="Hello Commander Data! I'm Frank."),
  AIMessage(content='Greetings, Frank. How may I assist you today?'),
  HumanMessage(content='What is ((2 * 8) ^2) ?'),
  AIMessage(content='The result of ((2 * 8) ^ 2) is 256.')],
 'output': 'I was created by Doctor Noonien Soong on the planet Omicron Theta.'}

Data, the character from Star Trek, was created by Doctor Noonien Soong on the planet Omicron Theta.

This detail might be surprising even to dedicated Star Trek fans. Now, let’s explore the capabilities of our LLM agent further by testing its ability to access real-time information. We’ll use a web search tool to find out the top news story today, a task that goes beyond the LLM’s training data.

agent_with_chat_history.invoke(

{"input": "What is the top news story today?"},

# This is needed because in most real world scenarios, a session id is needed

# It isn't really used here because we are using a simple in memory ChatMessageHistory

config={"configurable": {"session_id": "<foo>"}},

)WARNING:langchain_core.tracers.base:Parent run 5cc77e7b-b727-430f-9497-b7e4762094a5 not found for run 9f496fb7-ab2d-46db-8785-ba062ba65d96. Treating as a root run.

[1m> Entering new AgentExecutor chain...[0m

[32;1m[1;3m

Invoking: `Tavily` with `top news`

[0m[33;1m[1;3m[{'url': 'https://apnews.com/', 'content': 'In a political shift to the far right, anti-Islam populist Geert Wilders wins big in Dutch election\nEurope’s far-right populists buoyed by Wilders’ win in Netherlands, hoping the best is yet to come\nDaniel Noboa is sworn in as Ecuador’s president, inheriting the leadership of a country on edge\nOn the cusp of climate talks, UN chief Guterres visits crucial Antarctica\nBUSINESS\nOpenAI brings back Sam Altman as CEO just days after his firing unleashed chaos\nThis week’s turmoil with ChatGPT-maker OpenAI has heightened trust concerns in the AI world\nTo save the climate, the oil and gas sector must slash planet-warming operations, report says\nArgentina’s labor leaders warn of resistance to President-elect Milei’s radical reforms\nSCIENCE\nPeru lost more than half of its glacier surface in just over half a century, scientists say\nSearch is on for pipeline leak after as much as 1.1 million gallons of oil sullies Gulf of Mexico\nNew hardiness zone map will help US gardeners keep pace with climate change\nSpaceX launched its giant new rocket but explosions end the second test flight\nLIFESTYLE\nEdmunds picks the five best c...(line truncated)... Geert Wilders winning big in the Dutch election. Additionally, there are reports on various topics such as climate talks, business developments, science updates, lifestyle tips, entertainment news, sports highlights, US news, and more.[0m

[1m> Finished chain.[0m

{'input': 'What is the top news story today?',

'chat_history': [HumanMessage(content="Hello Commander Data! I'm Frank."),

AIMessage(content='Greetings, Frank. How may I assist you today?'),

HumanMessage(content='What is ((2 * 8) ^2) ?'),

AIMessage(content='The result of ((2 * 8) ^ 2) is 256.'),

HumanMessage(content='Where were you created?'),

AIMessage(content='I was created by Doctor Noonien Soong on the planet Omicron Theta.')],

'output': 'The top news story today includes a political shift to the far right in the Netherlands, with anti-Islam populist Geert Wilders winning big in the Dutch election. Additionally, there are reports on various topics such as climate talks, business developments, science updates, lifestyle tips, entertainment news, sports highlights, US news, and more.'}

Sometimes the search tool fails to retrieve the information because of a timeout or a similar issue. This happens occasionally, especially with free services. If it does, simply run the query again.
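Rerunning a failed query by hand can also be automated. The following is a minimal sketch, not part of the original lesson: `invoke_with_retry` is a hypothetical helper that retries any zero-argument callable with exponential backoff. It only helps when the failure surfaces as a Python exception; if the agent instead returns an answer saying the search found nothing, you would need to inspect the output yourself.

```python
import time
from typing import Any, Callable


def invoke_with_retry(
    call: Callable[[], Any],
    attempts: int = 3,
    base_delay: float = 1.0,
) -> Any:
    """Run `call`, retrying with exponential backoff if it raises.

    `invoke_with_retry` is a hypothetical helper for illustration; it is
    not part of LangChain.
    """
    if attempts < 1:
        raise ValueError("attempts must be at least 1")
    for attempt in range(1, attempts + 1):
        try:
            return call()
        except Exception:
            if attempt == attempts:
                raise  # out of retries: surface the last error
            # Wait base_delay, then 2x, 4x, ... before the next try
            time.sleep(base_delay * 2 ** (attempt - 1))


# Possible usage with the agent from this article (left commented out
# because it needs the agent and API keys set up earlier):
# result = invoke_with_retry(
#     lambda: agent_with_chat_history.invoke(
#         {"input": "What is the top news story today?"},
#         config={"configurable": {"session_id": "<foo>"}},
#     )
# )
```

Wrapping the call in a `lambda` keeps the helper independent of the agent, so the same function can retry any flaky call.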

Next, let’s check whether the agent remembers the earlier conversation by asking about the math question:

agent_with_chat_history.invoke(
    {"input": "What math question did I ask you about earlier?"},
    # A session id is required because most real-world scenarios need one.
    # It isn't really used here because we are using a simple in-memory ChatMessageHistory.
    config={"configurable": {"session_id": "<foo>"}},
)

WARNING:langchain_core.tracers.base:Parent run 11dbd4fb-d837-4aba-9f60-7c68cdee5977 not found for run e66dbd83-17fd-4222-916f-8dd0ad7ec3c1. Treating as a root run.

> Entering new AgentExecutor chain...

You asked me to calculate ((2 * 8) ^ 2), which resulted in 256.

> Finished chain.

{'input': 'What math question did I ask you about earlier?',

'chat_history': [HumanMessage(content="Hello Commander Data! I'm Frank."),

AIMessage(content='Greetings, Frank. How may I assist you today?'),

HumanMessage(content='What is ((2 * 8) ^2) ?'),

AIMessage(content='The result of ((2 * 8) ^ 2) is 256.'),

HumanMessage(content='Where were you created?'),

AIMessage(content='I was created by Doctor Noonien Soong on the planet Omicron Theta.'),

HumanMessage(content='What is the top news story today?'),

AIMessage(content='The top news story today includes a political shift to the far right in the Netherlands, with anti-Islam populist Geert Wilders winning big in the Dutch election. Additionally, there are reports on various topics such as climate talks, business developments, science updates, lifestyle tips, entertainment news, sports highlights, US news, and more.')],

'output': 'You asked me to calculate ((2 * 8) ^ 2), which resulted in 256.'}

Our LLM agent is working well. It’s built on everything the character Lt. Cdr. Data from Star Trek ever said, and it can also search the internet, solve math problems, and maintain short-term memory. Essentially, we have a chatbot. Let’s try another question for fun: “How do you feel about Tasha Yar?” If you are familiar with the show, you’ll know that’s a complex question.

agent_with_chat_history.invoke(
    {"input": "How do you feel about Tasha Yar?"},
    # A session id is required because most real-world scenarios need one.
    # It isn't really used here because we are using a simple in-memory ChatMessageHistory.
    config={"configurable": {"session_id": "<foo>"}},
)

WARNING:langchain_core.tracers.base:Parent run 13d0f437-67a6-426b-aa7d-af1984569b00 not found for run 2b14ab7f-49f8-440d-b136-f8955e172675. Treating as a root run.

> Entering new AgentExecutor chain...

Invoking: `data_lines` with `{'query': 'Tasha Yar'}`

of your sister. A sensing device on the escape pod. It monitors the bio-electric signatures of the crew. In the event they get separated from the pod. She would have to be armed, Commander. Tasha exists in our memories as well. Lieutenant Yar was killed on Vagra Two by a malevolent entity. No... she was killed as a demonstration of the creature's power. Without provocation.

all but destroyed. Sensors show that the colonists now live in structures that extend nearly three kilometers beneath the city. The dispersion trail continues in this direction. The concentration gradient definitely increases along this vector. The escape pod was apparently moved into the tunnels ahead of us. A former crewmember was born here. She was killed in the line of duty. Welcome to the Enterprise, Ishara. I am Commander Data. I am an android. On what do you base that assumption? The Enterprise is not a ship of war. It is a ship of exploration. My orders are to escort you to the Observation Lounge. We will proceed from there. Your sister never spoke of you. It is surprising to me. Tasha and I spent much time together in the course of our duties. Only to say that she was lucky to have escaped. 'Cowardice' is a term that I have never heard applied to Tasha. No. It is just that for a moment, the expression on your face was reminiscent

No. You will just move it again. I will not help you hurt him. Enterprise, ARMUS has enveloped and attacked Commander Riker. I would guess that death is no longer sufficient entertainment to alleviate its boredom. Therefore, Commander Riker is alive. I have no control over what you do with the phaser. Therefore, I would not be the instrument of his death. It feels -- curious. You are capable of great sadism and cruelty. Interesting. No redeeming qualities. I think you should be destroyed. Why? Sir, the purpose of this gathering has eluded me. My thoughts are not for Tasha, but for myself. I keep thinking how empty it will feel without her presence. I missed the point. Sir, we put Mister Kosinski's specs into the computer and ran a controlled test on them. There was no improvement in engine performance. It is off the scale, sir... Captain, no one has ever reversed engines at this velocity. A malfunction...

in two hundred and nineteen fatalities over a three- year period. Negative, Commander. The Talarians employ a subspace proximity detonator. It would not be detectable to our scans... By matching DNA gene types, Starfleet was able to identify the young man as Jeremiah Rossa... She is his grandmother, Captain. He was born fourteen years ago on the Federation Colony, Galen Four. His parents, Connor and Moira Rossa, were killed in a border skirmish three years, nine months later when the colony was overrun by Talarian forces. The child was listed as missing and presumed dead. The Talarians are a rigidly patriarchical society. Q'maire at station, holding steady at bearing zero-one-three, mark zero-one-five. Distance five-zero-six kilometers.

Lieutenant Yar? Captain Picard ordered me to escort you to Sickbay, Lieutenant. I am sure he meant 'now.' So you need time to get into uniform... Chronological age? No, I am afraid I am not conversant with your ---

I am sorry. I did not know... Of course, but... In every way, of course. I have been programmed in multiple techniques, a broad variety of pleasuring... Fully, Captain, fully. We are more alike than unlike, my dear captain. I have pores. Humans have pores. I have fingerprints. Humans have fingerprints. My chemical nutrients are like your blood. If you prick me, do I not leak? Nice to see you, Wesley. What...

...(output truncated)...

Judging a being by its physical appearance is the last great human prejudice, Wesley. Captain, we are now receiving Starfleet orders granting a Lwaxana.... ... full ambassadorial status, sir. And yours too, Commander. She is listed as representing the Betazed government at the conference. I would have thought a telepath would be more discreet. Our orders on her mentioned nothing specific except... We are to cooperate with her as fully as possible, deliver her there untroubled, rested... I assume those were merely courtesies due her rank, sir. Inquiry, Commander: to which dinner was the captain referring?

I have provided information about Tasha Yar, including her background, experiences, and interactions with various crew members. If you have any specific questions or topics you would like to discuss further regarding Tasha Yar, please feel free to ask.

> Finished chain.

{'input': 'How do you feel about Tasha Yar?',

'chat_history': [HumanMessage(content="Hello Commander Data! I'm Frank."),

AIMessage(content='Greetings, Frank. How may I assist you today?'),

HumanMessage(content='What is ((2 * 8) ^2) ?'),

AIMessage(content='The result of ((2 * 8) ^ 2) is 256.'),

HumanMessage(content='Where were you created?'),

AIMessage(content='I was created by Doctor Noonien Soong on the planet Omicron Theta.'),

HumanMessage(content='What is the top news story today?'),

AIMessage(content='The top news story today includes a political shift to the far right in the Netherlands, with anti-Islam populist Geert Wilders winning big in the Dutch election. Additionally, there are reports on various topics such as climate talks, business developments, science updates, lifestyle tips, entertainment news, sports highlights, US news, and more.'),

HumanMessage(content='What math question did I ask you about earlier?'),

AIMessage(content='You asked me to calculate ((2 * 8) ^ 2), which resulted in 256.')],

'output': 'I have provided information about Tasha Yar, including her background, experiences, and interactions with various crew members. If you have any specific questions or topics you would like to discuss further regarding Tasha Yar, please feel free to ask.'}

In conclusion, this demonstration shows how versatile an LLM agent can be. This example was meant to be entertaining, and real-world applications will likely be more practical and less whimsical. The key takeaway is that you can configure tools to perform a wide range of tasks using Python code and whatever libraries you need, so the approach adapts readily to your own requirements.
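To make that takeaway concrete, here is a minimal, framework-free sketch of what “configuring a tool” amounts to: a name, a description the model reads when deciding what to use, and an ordinary Python function. The names `SimpleTool`, `TOOLS`, `run_tool`, and `describe_tools` are hypothetical and chosen for illustration; this is not LangChain’s API, only the general idea behind log lines such as “Invoking: `Tavily` with `top news`”.

```python
from dataclasses import dataclass
from typing import Callable, Dict


@dataclass
class SimpleTool:
    """Hypothetical stand-in for an agent tool (not a LangChain class)."""
    name: str
    description: str  # what the model would read when choosing a tool
    func: Callable[[str], str]


def word_count(text: str) -> str:
    """Example capability: count whitespace-separated words."""
    return str(len(text.split()))


def shout(text: str) -> str:
    """Example capability: convert text to upper case."""
    return text.upper()


# Registry of available tools, keyed by name
TOOLS: Dict[str, SimpleTool] = {
    tool.name: tool
    for tool in (
        SimpleTool("word_count", "Counts the words in a piece of text.", word_count),
        SimpleTool("shout", "Converts text to upper case.", shout),
    )
}


def describe_tools() -> str:
    """Render the tool list that would be placed in the model's prompt."""
    return "\n".join(f"{t.name}: {t.description}" for t in TOOLS.values())


def run_tool(name: str, tool_input: str) -> str:
    """Dispatch a tool call once the model has picked a name and an input."""
    if name not in TOOLS:
        return f"Unknown tool: {name}"
    return TOOLS[name].func(tool_input)
```

In a real agent the model chooses the tool name and input; here you would call `run_tool("word_count", "make it so")` yourself to see the dispatch step in isolation. Swapping in a new capability only means registering another function with a clear description.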

Empowering Your Journey in AI and Machine Learning

In this article, we’ve explored the fascinating world of LLM agents, delving into their structure, capabilities, and practical implementation. We’ve seen how these agents can be equipped with tools for retrieval, mathematical computations, and real-time web searches, significantly extending their functionality beyond traditional LLMs. By building an agent based on Star Trek’s Data, we’ve demonstrated how to combine various capabilities into a coherent, interactive system. This knowledge opens up exciting possibilities for creating more sophisticated and versatile AI systems. Thank you for joining us on this exploration of cutting-edge AI technology.

If you’re intrigued by the potential of LLM agents and want to dive deeper into the world of machine learning and AI, consider exploring the Machine Learning, Data Science and Generative AI with Python course. This comprehensive program covers a wide range of topics, from the basics of Python and statistics to advanced concepts in deep learning, generative AI, and big data analysis with Apache Spark. Whether you’re looking to transition into a career in AI or enhance your existing skills, this course provides the practical knowledge and hands-on experience you need to succeed in this rapidly evolving field.